Read a longer version of this article on the Unboxed Medium page.

At Unboxed, we want to design better services. We spend a lot of time understanding what our clients need to deliver a service in a way that works for both service users and the people who manage it day to day.

MVP, or minimum viable product, is one of the approaches we use to get our clients from insights (learning about what users want and need) to implementation (building or buying a solution). But we’ve found that people understand ‘MVP’ in different ways, particularly in the public sector. This can lead to tension and misalignment about what we’re delivering and how.

So what does it mean? And can MVP ever work in the public sector?

A definition of MVP

MVP has been somewhat stretched and distorted since its beginnings in the world of software development.

In simple terms, MVP, or minimum viable product, is about building the minimum features that allow us to deliver some value to users. In other words, users can use the product to do something that is useful to them. We need to deliver enough value to get feedback and better understand what users want and need. Then we can reflect and build the next feature or improve the features we’ve got.

We end up with a leaner product that costs less and is available sooner.

MVP is not the thing you are building. It’s a way of getting there. The name, minimum viable product, doesn’t help much, because it sounds like a thing rather than an approach. The real danger lies in building something without doing all the experimentation and work needed to get to that point.

MVP in the public sector

We work with many public sector organisations, from central government to local authorities and NHS Trusts, where the appetite for risk is, understandably, pretty low. From the start, we need to ask some questions to understand the culture:

- what is the client’s appetite for building products from scratch versus buying products off the shelf?

- what is their understanding of MVP?

- are they prepared for our experiments to fail?

We often end up with two big challenges to MVP:

- A lack of capacity or appetite amongst staff (internal users) to engage with the MVP

- A focus on product over approach

Doesn’t MVP mean more work?

Say we’re building a new tool for planning officers to process planning applications. These are busy people with a lot of admin who need software that, as a minimum, allows them to do their job. Ideally, it would make their job easier and more efficient.

It’s a complex project. We’re trying to engage many different councils at once and manage lots of political stakeholders. But we can only build a little piece of the puzzle for them, and planning officers need that little piece to do everything their current system does.

There are two things that might happen during MVP:

- we ask them to use a tool that, at the moment, does part of their job but not all of it. So they need to find another way of doing those other things, perhaps on bits of paper or spreadsheets or some other workaround.

- we ask them to use the new tool for some parts of their job. But they still need to do all the other parts of their job in the old system, so they end up doing things twice in two different systems that work in different ways.

Either way, it’s more work for them. They may not understand the principles of MVP. They’re probably not even interested. They just want the finished product so they can get on with their job.

When people are already working to capacity, it’s hard to give them the time and space to engage with an experimental approach in a positive way. It’s no wonder that a council IT Director recently told us that, “When people [working in councils] hear MVP, they think ‘crap solution’. They don’t want MVP.”

It’s different in the entrepreneurial world, where the investor takes all the risk. In the public sector, you’re expecting your employees to invest in the MVP when they’re already too busy doing their day jobs. They get the same salary regardless and, unlike an investor, they have no chance of a big payoff further down the line.

We need to understand the reality for the people who are actually using the tool. We need to understand their working culture and what the MVP process will mean for their day-to-day work. Then we need to help them understand the value that they can get from it, for themselves and for the services they deliver.

A fixation on products

In the public sector, many, many employees are already using software that doesn’t do the job perfectly. The company that makes it has built something that mostly works for lots of customers: it makes money, and the company can adapt it and sell it to others.

But it shouldn’t be about the product. A council should be able to focus on the service they want to deliver to users. What do they need that service to do? What impacts does it need to create for users?

MVP allows the council to prototype solutions, see what works, then go to the suppliers and tell them — these are the outcomes we want, can you build something that will deliver these outcomes? Starting with the product is the wrong way around.

Too often, ‘MVP’ is couched in a mindset of delivering a product. What we should be saying is, “How can we use the MVP approach to improve or test innovations in our service?” A product may be part of that, but it exists to help us deliver a better service to residents or customers or whoever our users are.

How do we create an MVP mindset?

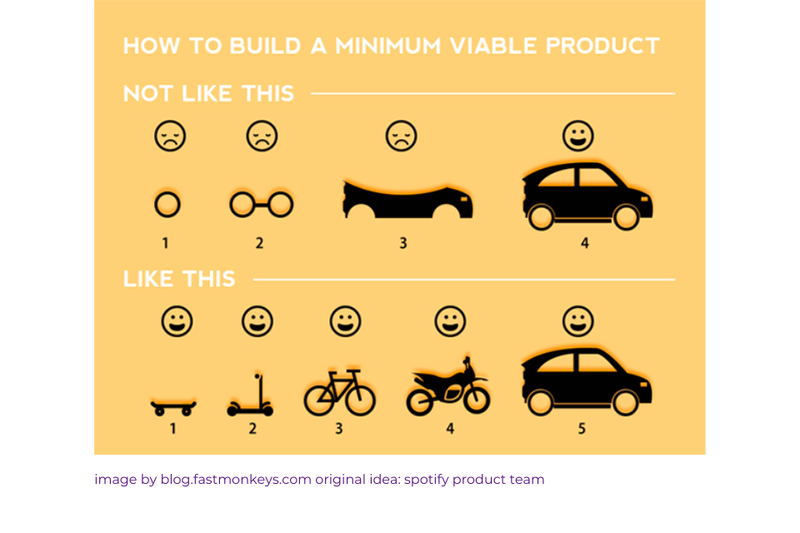

Google ‘MVP’ and you’ll find a diagram that shows two different approaches to building a car. The first starts with wheels, then a chassis, then ends up with a car. The user can’t use the product until it’s finished. The second starts with a skateboard, then moves through a scooter, a pedal bike and a motorbike before getting to the car. The user is happy because they can use the product from its very first version, the skateboard. This diagram shows two things:

- MVP is about building something that people can use right from the start. You can learn from how people use it, then iterate and improve.

- MVP should be based on the outcome for the user, not the solution. The first option assumes the user needs a car. The second option is addressing the user’s need to move around. Through trial and error, they end up with a car.

The diagram highlights the role of MVP in reducing risk. You can try something small, learn what works, invest a bit more, learn some more, build some more and so on. In the alternative model, you’re building a whole car which no one can use until the whole thing is complete.

But this doesn’t always work, particularly in the public sector. It’s hard to say to people that you’re going to give them a skateboard when they’ve already got a car. The car might be a bit rubbish and creaky but it’s better than a skateboard.

An example from the public sector

MVP can work in the public sector, but the appetite and the funding have to be right.

Here’s an example of where MVP did work in an NHS Trust.

Through a service-wide discovery process, we had identified an opportunity for a better referral service within an NHS hospital.

Problem:

When patients arrive for an appointment, their referral information can be incomplete. That makes the first appointment with a consultant an expensive form of triage: the consultant needs to work out whether the patient even needs to be there.

Our hypothesis:

If we could get better information from patients before they attended, we could reduce the number of wasted appointments by triaging patients to the right care, faster.

Acting on this might mean building an online form and integrating it with the booking system, which is a lot of work. We weren’t yet convinced that it was the right thing to do. We needed to know:

- would patients be able to give us the information we needed?

- would that information be useful for a clinician?

Experiment 1:

We went to the waiting room and asked patients to fill in a survey on a paper form. After that, each patient had their face-to-face appointment with the clinician as planned.

A different clinician then looked at our survey results from 100 patients. He compared the decisions made in their face-to-face appointments with the decisions he would have made based on the information in the paper form. He was satisfied that these decisions lined up and that the information patients had given would be useful in advance of the appointment. Clinicians could use that information to triage patients to the right care, reducing the number of patients who needed to see a consultant and the time wasted by both patients and clinicians.

Experiment 2:

We’d tested the form with bored patients sitting in a waiting room. We needed to know whether they would complete it online in advance of their appointment.

We created an online survey with a free tool. We sent it to patients a couple of weeks before their appointment and they filled it in, giving us broadly the same information as they had in the paper survey.

What we learnt

- people will fill in an online form

- the data is useful for clinicians

The Trust then felt more confident about investing in a product that would do the same thing more efficiently.

Outcome

The Trust used some existing tools and an existing supplier to build the tool, and it worked. Unboxed didn’t even build the product at the end of it.

We delivered value by helping the Trust to test an idea and understand what they needed to build before they commissioned a supplier. This was the MVP.

Setting the environment for MVP

We meet many public sector clients who get MVP and who want to do it well. How can we support them to get the most value from an experimental approach?

Start the conversation early

Use your project kick-off meeting to understand the client’s perceptions of MVP. Set out the value of the approach. Listen to what they think it means for their role and their involvement in the project. If people are unsure, confused or hostile, address that up front.

As we’ve discussed in another blog post, service design works best when we build relationships that allow people to question and challenge the approach from the start. This way you can address concerns and bring people on the journey with you, rather than having to deal with push-back midway through a project.

Get the product owner on board

We’ve worked with product owners (POs) who use the term MVP repeatedly: ‘let’s build the MVP’, ‘we’re building an MVP’. The MVP becomes the basis for the project, the thing we’re building, so that’s what their team understand it to mean.

We need to do more to challenge that thinking early on. We need to help POs to explain to their team that MVP is not what they are delivering but how.

Be honest

MVP can be scrappy. It can mean duplicating work while features are being tested. We might be asking people to try out a prototype that does some of the things they need but not all of them, while still using their old system. If people don’t understand how this is going to help them in the long run, it just becomes a frustration.

Build in time for training

We run an agile training course for clients, and it includes a whole section on MVP. We run it early in a project to help everyone understand the value of an agile approach. It also gives people a safe space to ask questions. We help them create their own language around MVP and find the bits that they can take into their work.

MVP is a journey

MVP represents the journey from insights and ideas to a product that you know is going to work: one that will provide better services to your customers and allow your team to deliver those services efficiently and effectively. MVP is a way of innovating and of understanding how technology can help to fill the gap between those first insights and a working product.

At Unboxed, we try to fill that gap. We take the insights and design solutions, we prototype and test them, we learn more about what it is you and your users need. Then you can build the right product, or buy it if it already exists. MVP is a means of getting you to that point — to making the right decision with less risk and more confidence.

This article is based on an interview with Martyn Evans, our Head of Product and Marie Ferreira, one of our Delivery Managers.

If you're interested in our approach, follow us on LinkedIn, Twitter or Instagram.

If you think we could help you improve your services, get in touch at hello@unboxed.co